“If it’s not worth writing, it’s not worth reading.”

Some of my friends know I keep repeating that line given the current state of our industry. I despise ChatGPT’s vernacular and robotic writing style, and it has poisoned the well of written online media to the point that Google is presumably feeding Reddit posts to its own AI right now to make it sound more “colloquial”, if that makes any sense. Regardless, that is the broader Cloud end of the spectrum. What I want to talk about is what Microsoft, Intel, NVIDIA, AMD, and possibly Apple and Qualcomm in the future, are doing on your desk.

I want to talk about “AI” on your own PC. I want to talk about how Microsoft, Intel and AMD are pushing AI onto the desktop, and how, in its current state, the entire industry is trapped in a “with the power of AI” pitch with no actual benefit for the end user. While professionals may see some benefit, the huge gap in adoption and performance between NVIDIA and the rest of the industry seemingly puts NVIDIA in a unique position to leverage this further… so why are Intel and Microsoft the ones pushing the AI PC?

I plan to make this a series of articles, so if I miss anything, it’s probably intentional.

What is an AI PC?

An AI PC has a CPU, a GPU and an NPU, each with specific AI acceleration capabilities. – Intel Newsroom

Read that again very slowly, and pay special attention to the first two parts: a CPU and a GPU. Chances are you already have both of these in your computer, so congratulations, you practically have an AI PC already. But what makes AI… AI? The answer varies very, very greatly.

I mentioned Microsoft earlier, and they are playing a big hand in this as well. Microsoft currently has its hands tightly wrapped around OpenAI’s throat, with Microsoft Copilot tied to it. Users need to understand that, if we go by what Microsoft wants, having an AI PC right now basically means being tied to the internet.

As of this moment, there exist multiple tiers of AI. For now, let’s follow NVIDIA’s prescribed separation:

- Light AI – NPU

- Medium AI – GPU

- Heavy AI – Cloud

NPUs are what Intel and AMD currently have on their hardware. NPUs, or Neural Processing Units, are specialized hardware made to accelerate machine-learning and AI tasks. Unlike GPUs, which are far superior in performance but also draw far more power, NPUs right now are conservative in power draw, and so is their performance.
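One way to picture NVIDIA’s three tiers is as a routing rule: send each workload to its tier’s preferred device and fall back when that device isn’t there. The Python below is a hypothetical sketch of that idea only; `Tier`, `PREFERENCES` and `pick_target` are made-up names, the fallback orders are my assumptions, and none of this is a real Intel, AMD, NVIDIA or Microsoft API.

```python
from enum import Enum


class Tier(Enum):
    # NVIDIA's three-way split as described above
    LIGHT = "light"    # NPU
    MEDIUM = "medium"  # GPU
    HEAVY = "heavy"    # Cloud


# Hypothetical preference order per tier: the tier's own device first,
# then progressively more general fallbacks (assumed, not vendor-defined).
PREFERENCES = {
    Tier.LIGHT: ["NPU", "GPU", "CPU"],
    Tier.MEDIUM: ["GPU", "CPU"],
    Tier.HEAVY: ["Cloud", "GPU"],
}


def pick_target(tier: Tier, available: set[str]) -> str:
    """Return the first preferred device for this tier that is available."""
    for device in PREFERENCES[tier]:
        if device in available:
            return device
    raise RuntimeError(f"no suitable device for {tier.name} workload")


if __name__ == "__main__":
    # e.g. an ultralight laptop with an iGPU and an NPU, currently offline
    laptop = {"CPU", "GPU", "NPU"}
    print(pick_target(Tier.LIGHT, laptop))  # NPU
    print(pick_target(Tier.HEAVY, laptop))  # GPU, since there is no Cloud offline
```

The offline case is the interesting one: a “Heavy” task only works without the internet if something local can pick it up, which is exactly the dependency Copilot has today.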

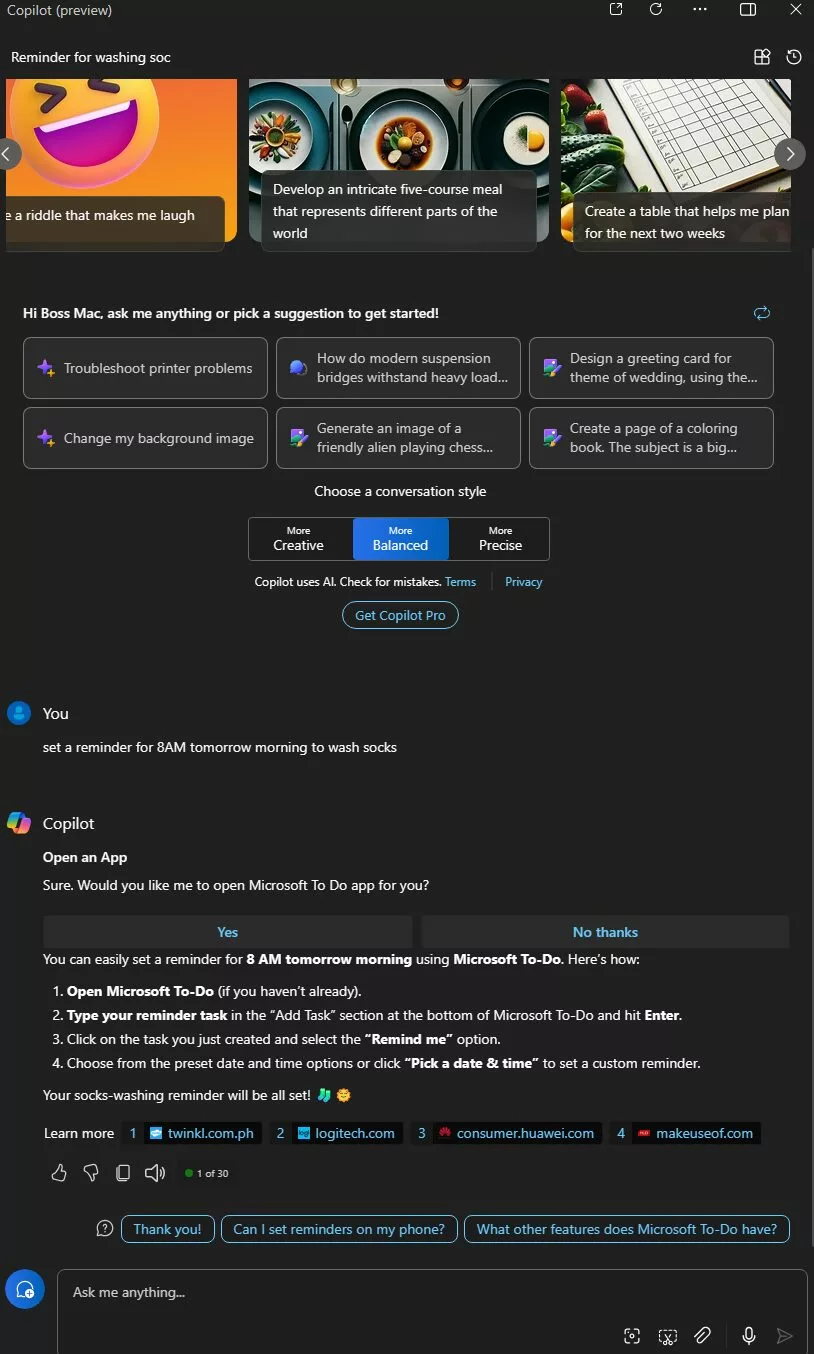

But understanding what NPUs and GPUs do for AI is only a portion of the problem, as the bigger issue here is software. Going back to Microsoft’s Copilot, as of this writing (April 10, 2024, 11:00 AM Manila/Taipei), the current version of Copilot in Windows 11 is incapable of doing basic assistive tasks. For example, instead of setting a reminder, it shows you a tutorial so you can do it yourself.

If you have an Android phone or an iPhone on hand right now, you can tell Siri or Google to set a reminder for 8 AM to wash socks, and I’m pretty confident it will do it on your phone.

This is what many people would expect AI to do, and it’s actually how Microsoft advertises Copilot. But apparently that’s forward-looking: this is expected to be an evolution of what Copilot can do, as the current version is only a “preview”.

And just like Copilot, similar applications like Adobe’s Generative Fill, Neural Filters and many other AI services predominantly run on cloud infrastructure, an industry heavily dominated by NVIDIA. So much so that the majority of the current AI landscape is optimized for NVIDIA CUDA, which is why many local, offline models preferably run on GPUs.

Intel and AMD both take the efficiency route here. They argue that even if an RTX 4080 Laptop GPU can generate a 512×512 image in Stable Diffusion in 11 seconds, that GPU draws 100W+ of power, versus their NPUs that do it 4x slower but at only 6W or so. This works great for laptops, which is where Intel’s Meteor Lake currently sits, but for AMD’s desktop NPUs it makes absolutely no sense. AMD is likely to release a refreshed laptop line at COMPUTEX 2024 featuring AI hardware, but with only 2 months to go, there is still no sign of a killer app on the horizon for NPU AI.
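The efficiency argument comes down to energy per image, which is average power multiplied by time. The Python sketch below runs that arithmetic on the figures quoted above, taken at face value; treating “100W+” as exactly 100W and assuming a constant power draw for both chips are simplifications on my part, not measurements.

```python
def energy_per_image_joules(seconds_per_image: float, watts: float) -> float:
    """Energy in joules = average power (W) x time (s)."""
    return seconds_per_image * watts


GPU_SECONDS = 11.0   # RTX 4080 Laptop GPU, 512x512 image, as quoted above
GPU_WATTS = 100.0    # "100W+" treated as a floor (assumption)
NPU_SLOWDOWN = 4.0   # NPU is "4x slower", as claimed
NPU_WATTS = 6.0      # "6W or so", assumed constant

gpu_j = energy_per_image_joules(GPU_SECONDS, GPU_WATTS)
npu_j = energy_per_image_joules(GPU_SECONDS * NPU_SLOWDOWN, NPU_WATTS)

print(f"GPU: {gpu_j:.0f} J/image, NPU: {npu_j:.0f} J/image")
print(f"NPU uses {gpu_j / npu_j:.1f}x less energy per image")
```

That works out to roughly 1,100 J versus 264 J: a 44-second wait for about a quarter of the energy. If anything the real gap is wider, since 100W is a floor. That trade matters on battery and barely matters on a plugged-in desktop.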

So what can I do with AI?

CES 2024 brought us a good batch of Intel Core and Core Ultra processors featuring NPUs, and reviews have already come out, the majority of them struggling to put NPU performance on a pedestal in hopes of securing that marketing sponsorship. Agendas aside, there is a plethora of AI software out there, but the challenge is finding what fits the NPU’s Light AI duties, as most local models are heavily task-based.

At this point in time, many of the existing AI/ML-accelerated apps are being ported to NPUs. Good examples of light-use applications are audio noise removal, background removal/replacement, and teleconferencing filters. The same AI filters can be found in audio editing software as well.

I mentioned Stable Diffusion earlier, and it is a polarizing piece of software: many artists are against it, but online media have gravitated to it to remove the need for bespoke article images. My personal opinion aside, Stable Diffusion has already been ported to run on NPUs, and GIMP and Filmora already have some NPU-accelerated features.

The keyword is “accelerated”. Using the NPU *could* be more efficient, but in its current state it’s probably not going to be any faster, and if we’re dealing with work, chances are you want it faster. There are still use cases here, like field work, particularly in journalism, where users carry ultralight laptops with only an integrated GPU and an NPU.

Outside of productivity, NPUs can be leveraged for other tasks, some of which get far less exposure than generative AI or LLMs. In theory, games could offload game AI logic to NPUs, but all of this hinges on their current capabilities.

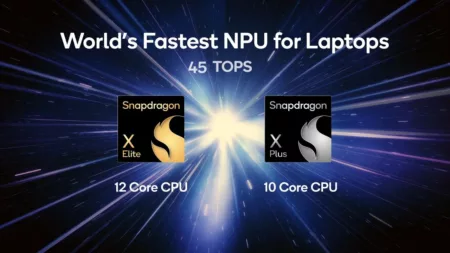

Intel suggests 40 TOPS (trillions of operations per second) as the target baseline for local AI, a figure we’ve yet to confirm, but it seems both Intel’s and AMD’s current NPUs are incapable of reaching it. That should change once Lunar Lake arrives, where Intel touts 100+ TOPS across the platform.
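Where do TOPS figures come from? Peak TOPS is usually computed as 2 × (number of multiply-accumulate units) × clock speed, since each MAC counts as two operations (a multiply and an add). NPU figures are also typically quoted at low precision such as INT8. The sketch below applies that formula. The 2.0 GHz clock is a hypothetical number chosen for round arithmetic, not the spec of any real NPU, and peak TOPS says nothing about how much of it an actual model will use.

```python
def peak_tops(mac_units: int, clock_hz: float) -> float:
    """Peak TOPS, counting each multiply-accumulate (MAC) as 2 operations."""
    return 2 * mac_units * clock_hz / 1e12


def macs_needed(target_tops: float, clock_hz: float) -> float:
    """MAC units required to reach target_tops at a given clock."""
    return target_tops * 1e12 / (2 * clock_hz)


# Hypothetical NPU clocked at 2.0 GHz (illustrative only)
CLOCK_HZ = 2.0e9
print(macs_needed(40, CLOCK_HZ))      # 10000.0 MACs for Intel's 40 TOPS target
print(peak_tops(10_000, CLOCK_HZ))    # 40.0
```

The same formula explains why vendors can raise TOPS either by adding MAC units or by raising clocks, and why two chips with equal TOPS can still behave differently on real workloads.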

At the end of the day, Copilot will eventually need to turn to local computation, especially with security concerns potentially slowing its adoption in business settings.

Benchmarking AI Performance

The IT media is struggling to figure out how to benchmark AI hardware as we speak. While UL Benchmarks’ Procyon now has Stable Diffusion numbers, the score is still arbitrary and based on image generation alone. We still need to address the performance of other applications like LLMs, NLP and generative AI in general; all of these are still exploratory territory.

Internally, I’ve debated which software to base performance on, and I’ve boiled it down to what’s actually usable in the market right now. Sad to say, these don’t run on NPUs, though I’ve yet to fully confirm that; it should come out in a review or two, soon. For now, I’m focusing on the system itself for performance. For NPUs, though, we’re targeting efficiency.

That said, I will be presenting NPU efficiency numbers along with some performance numbers that are actually usable. I could go out, publish SD1.5 numbers and call it a day, but is it worth it for someone to wait 4x longer than on a GPU-accelerated laptop, rather than just going to Google, Midjourney or DALL-E to render a target image?

What I’m currently looking at for AI compute testing and AI performance benchmarking is the following:

- Topaz Video AI – upscaling a video from 1080p to 4K60; it currently only runs on the CPU or GPU. It’s the tool of the trade for video/content thieves re-uploading other people’s work. They won’t tell you it’s Topaz, but you know they use it.

- Stable Diffusion – stock setup straight from their repos for NVIDIA, plus the Intel and AMD forks. We use a uniform prompt to generate a 10-image batch and compute images per minute. I’ll be honest, I don’t like image generation, but it has its uses, especially in compositing and layout where you just need an asset to complete a work. So regardless of my opposition to how it’s currently being used, SD will be our image generation test tool. Plus, most companies are now fitting it into their software suites just to bolster the “AI” feature.
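The Stable Diffusion metric above, images per minute for a 10-image batch, is simple to compute, and pairing it with an energy figure gives the efficiency angle we want for NPUs. The sketch below shows both. The 150-second run and 6W average draw are placeholder inputs to show the math, not results from our testing, and measuring average power over the run is left to whatever meter or telemetry you have.

```python
def images_per_minute(images: int, elapsed_s: float) -> float:
    """Throughput for a batch run: images divided by elapsed minutes."""
    return images / (elapsed_s / 60)


def images_per_watt_hour(images: int, elapsed_s: float, avg_watts: float) -> float:
    """Efficiency: images produced per watt-hour consumed during the run."""
    watt_hours = avg_watts * elapsed_s / 3600
    return images / watt_hours


# Placeholder inputs (hypothetical, not measured): 10-image batch in 150 s at 6 W
BATCH = 10
ELAPSED_S = 150.0
AVG_WATTS = 6.0

print(f"{images_per_minute(BATCH, ELAPSED_S):.1f} images/min")                 # 4.0
print(f"{images_per_watt_hour(BATCH, ELAPSED_S, AVG_WATTS):.1f} images/Wh")    # 40.0
```

Reporting both numbers side by side is what lets a slow-but-frugal NPU and a fast-but-hungry GPU be compared fairly.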

And here are a few I’m exploring. I have the software, but given they’re not that popular yet, these are still tentative:

- Image and video library cataloguing and identification – this is a class of software that tags videos, images and audio by what they contain, making sorting, archiving and retrieving assets easier when needed. Right now, on desktop I use Nero AI for this, while my QNAP NAS has it built in through one of its apps.

- Text-to-speech / real-time translation – something I’m really interested in is real-time translation and transcription, then having that transcript read aloud in a natural tone that carries the speaker’s original intent and delivery. I’ve yet to discover software that does this locally, but some cloud services that do these tasks separately have shown very promising results.

- AI NPC – Inworld’s AI NPC concept was a groundbreaking reveal, and NVIDIA took it further with ACE, but Inworld’s current implementation focuses more on interaction. AI NPC decision-making and other gameplay simulations should be lighter workloads for local processing. I’ve yet to see an implementation, but it’s something I’m very interested in testing.
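For the cataloguing item in the list above, the AI part is the model that produces tags; retrieval afterwards is plain bookkeeping, usually an inverted index from tag to files. The sketch below shows only that index. The file names and tags are hypothetical stand-ins for what a recognition model would output, and this is not how Nero AI or QNAP’s app is implemented internally.

```python
from collections import defaultdict


def build_index(tagged: dict[str, list[str]]) -> dict[str, set[str]]:
    """Invert {file: [tags]} into {tag: {files}} for fast lookup."""
    index: dict[str, set[str]] = defaultdict(set)
    for path, tags in tagged.items():
        for tag in tags:
            index[tag.lower()].add(path)
    return dict(index)


def find(index: dict[str, set[str]], *tags: str) -> set[str]:
    """Return files that carry every requested tag."""
    matches = [index.get(t.lower(), set()) for t in tags]
    return set.intersection(*matches) if matches else set()


# Hypothetical model output: file -> tags it detected
tagged = {
    "beach.jpg": ["beach", "dog"],
    "park.mp4": ["dog", "tree"],
    "memo.wav": ["speech"],
}
index = build_index(tagged)
print(sorted(find(index, "dog")))           # ['beach.jpg', 'park.mp4']
print(sorted(find(index, "dog", "beach")))  # ['beach.jpg']
```

Tagging is the part that could land on an NPU; because it runs once per file in the background, it is a good fit for a slow but efficient chip.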

Image recognition seems basic, but training in general is the more taxing part. Still, if a model is capable of running on most hardware after training and optimization, I’m interested in including it.

I’m currently consulting with the lead of this project to expand this further.

Thoughts for Now

I chose the most generic stock photo I could find on the first page of Envato when searching for AI. I’m that lazy. But I’m also very passionate about this industry, and I feel we’re diving way too early into putting AI into everything despite not having the tools to begin with. So, Intel and AMD, ease off the gas for a bit and let consumers breathe. We know the pandemic sales really inflated your company numbers, but that ship has long sailed, and the metaverse didn’t really pick up, so now everyone’s on the up and up with AI.

But compared to VR or the metaverse, AI certainly has its uses, and ChatGPT is the benchmark for how significant AI can be. Even a fraction of what ChatGPT can do would be an immense leap in computing, especially if it can run on a 15W processor at similar response times.

My sentiments are mixed: I hate how rushed this industry is making “AI” feel for the general consumer, but just like the pandemic, forcing something may not be a bad thing. After all, video games and PC sales benefited the most from the 2020 pandemic, and AI could take advantage of this situation if only the hardware vendors were more supportive of developers.

In my next article, I’m planning to go through the software side as well as the requirements side, and talk about how expansive the entire landscape of everything “AI” is, potentially even discussing AGI, how far off we are from it, and its benefits and repercussions.

If you’ve reached this point in the article, thank you. No surprise here: this isn’t ChatGPT-generated; this is my daily brainfart turned into an article. After all, I’m the kid who wrote an AI apocalypse story for his first-year English project back in high school, so I’ve long been fascinated by this topic.

What’s that? Should you get an AI-powered PC? As always, it depends on what you’ll be doing. Hit me up in the comments if you need any guidance, or follow Back2Gaming for gaming and PC news and reviews.

6 Comments

There are AI cores now???!!!

Core Series 1 and Ryzen 8000G, damn, it’s been months already haha

So… it’s like they’re just going to milk you for money for some useless AI shit?

Ran Bindoy, you still get a CPU anyway ehehe

Though I haven’t really seen much that uses the AI cores in CPUs yet (or they’re still underpowered), so apps still mostly use the GPU/RAM.

That’s exactly what I’m exploring, bro, so I’ll need your insights in the next articles about this. Just doing pre-testing with the available NPUs for now so we have a baseline.