I originally intended to publish this article a few weeks ago, when ChatGPT was blowing up in our news feeds, but once it reached the point that even YouTubers covering topics like Excel were talking about it, my aversion to following trends kicked in and I pushed the draft aside. Five hours after drafting this, I also realized that every title I can think of is a cliché that has already been done by someone else. That is by no means intentional, so if I am echoing someone else’s sentiments, then you and I share the same views about this topic.

But recently, CNET came under fire after one of its articles was discovered to be AI-generated. The fact that the article was generated using AI isn’t necessarily malicious in itself. But when the content is meant to be an authoritative piece that can move readers to take an action that could cost them money, relying solely on their trust in the publication, people take issue with that.

To summarize, CNET got called out for publishing content generated by AI. But here’s where the discussion of AI-generated content really starts, because I 100% believe CNET’s intention wasn’t to mislead anyone reading that article into buying or investing in anything. Just like any publication, we all want to meet a quota, and the pace at which humans consume content now far outpaces the rate at which we can produce it.

What is AI?

As a ’90s baby, my idea of AI is founded on what is portrayed in The Terminator: a rogue AI rebelling against mankind, creating Terminator models and sending them back in time to ensure its survival. For a more modern pop-fiction reference, there is Ultron, an AI from Marvel who, in his movie incarnation, is a peacekeeping program that deduced his ultimate goal could be achieved if he just wiped out the entire human race.

Many of us relate AI to those references: an evil meant to take over or erase humanity altogether. We’ve always had this fear of it, and whether it’s Skynet, Ultron or, for my fellow Megaman X fans, bots turning Maverick, it ultimately all comes down to one thing: emotions.

But technology has not advanced to the point where humanity can program machines to feel emotions. Hence, malice and hatred are not inherent to AI, nor can you program them. It has to be instructed to do something malicious or, put more simply, wielded to act as such.

Artificial Intelligence is what the name suggests: intelligence made by humans. But as the term is used now, it is ultimately an oxymoron: there is nothing of intelligence in artificial intelligence. What we are really referring to is machine learning algorithms dressed up as artificial intelligence: a growing chain of what are fundamentally if-else statements, accelerated by modern hardware. When used in applications where the user benefits with seemingly no negative aspects, it’s almost always a win-win… well, sort of.

The Sins of AI and Machine Learning

“AI” has been the talk of the town in recent weeks for 2 things:

- ChatGPT

- AI art

If you’re not up to speed with current events, OpenAI recently launched its ChatGPT bot, which interacts with humans on anything from writing songs, writing code and giving relationship advice to recommending solutions in Microsoft Excel. No worries: its internet knowledge only goes up to 2021 and it doesn’t search the net for answers, but it does store your interactions, which are used to reinforce the AI.

The hype behind ChatGPT has spawned a massive influx of content discussing its pros and cons, ranging from articles to YouTube videos, but it didn’t take long for individuals to find another use for it. Within the first 24 hours, programming and development communities were abuzz with people praising ChatGPT’s “coding skills” and how it helped some folks in “optimizing” their code.

While numerous sites have been operating their own algorithms, it wasn’t until OpenAI’s ChatGPT boom that the use of generative AI came to the public’s eye. Now we see it in use for a lot of things, including writing articles.

Back to our story, it didn’t take long before YouTubers stepped in, both discussing the accuracy of the recommendations and instructions made by ChatGPT and asking ChatGPT to write an entire script for a video. What seemed to be criticism then blossomed into another niche, as a portion of YouTube created tutorials on, and admitted to using, a formula: generate the video script that was formerly written by a freelancer, convert it into narration through either a freelance voice talent or a text-to-speech service, and then hand it to another freelancer to add the visuals.

And that is where the bar chart race, movie summary, top list and other non-specialized video niches have emerged: a sub-section of YouTube made purely to farm ad money.

We haven’t even touched on AI art but the word itself is self-descriptive in our context. “AI art” is any machine-generated image created with machine-learning models. Again, the act itself is not malicious but here it veers into 2 sins: the first sin is by the user and the second is by the maker.

Before an AI model can generate any image, it has to know what “art” looks like, so it needs a ground truth. This ground truth is a library of images from the internet, and many artists are not pleased to have their art used for this purpose. Ultimately, the AI that many of these “AI image” generation apps use has learned from these artists’ original creations.

What was once their signature art style has ultimately been distilled into a camera filter. And when that filter is used in a free app that generates income from a huge install base while the original artist goes uncredited, it is certainly clearer why artists’ hate for AI art has grown more vocal.

And this extends beyond app makers because, just like the YouTube AI video niche, an AI art niche has also sprung up, and this is where a clear line between deception and sheer greed is drawn.

Hailing yourself as an artist using AI art is one thing but calling yourself an artist, not disclosing you use AI image generation and then charging the same amount is just downright despicable. No amount of “respect the hustle” can justify any soul profiting from this scheme.

Human Desire

Desire is such an ambivalent term. It can be noble but it can also be malicious. Desire can fuel passion, but it can also fuel greed. But you can’t program desire. ChatGPT and DALL-E are both outcomes of years of research into advancing machine learning until it eventually becomes indistinguishable from AI. This can solve various problems in manufacturing, retail, learning, and many other fields.

In the PC gaming space alone, we have XeSS and DLSS. Both are well-intended solutions that aim to ease the transition from raster graphics to ray-traced graphics, which will eventually lead us to path-traced graphics. Yet the gaming community treats these technologies as a way to cheat customers.

But when the foundational technology behind XeSS and DLSS is used to enhance low-resolution videos, perhaps old war footage or even something as personal as a home video from when you were a baby, the technology is put in a more positive light. And in the case of XeSS and DLSS, you’re not forced to use them. You can disable them anytime. You are not being lied to when a game says Upscaling OFF. But I digress. We’ll cover the gaming industry’s recent trend of using AI upscaling as a crutch to bump up performance in another article, but the core here is that it’s the developers either lazing off or just being plain unable to hit their targets that pushes them to this need.

In that last situation, we’re given a scenario where it’s a little gray. But between AI-generated articles, scripts, code or images, the one thing that dictates their affinity is how they are used, and plain ol’ human greed is the backbone of the corruption of machine learning and AI. Greed comes at multiple levels here: 1) greed to sustain content for click revenue, 2) greed to create content for YouTube ad revenue, and 3) greed to create a false image to generate revenue.

And the developers are not innocent here. By allowing users to reinforce their models by using them, they generate a huge amount of data to further train their AI, all with the consent of the user. And that is greed for accomplishment. Because at the end of the day, OpenAI charges for usage of their API, so once ChatGPT meets their goals, it’s going to end up as another $0.001/query AI model in their API library, or something along those lines.

Closing Thoughts

I have to thank you for taking the time to listen to me rant. This isn’t the part of the article where I disclose that this was all written by AI. This is just me dumping my thoughts about this topic, as it’s constantly at the back of my mind, and what triggered this eventual outpour of thoughts was an email from a random person saying:

“We offer a service where we can verify if content is generated by AI”

That pissed me off big-time.

Not because they were saying I use AI-generated content. What ticked me off was the fact that this person made an algorithm to check if an AI was involved in my content creation. And yes, it’s an algorithm. There’s no guy in some office in Singapore checking my article for originality. It’s exactly what the Thanos meme portrays and, to paraphrase: they use AI to destroy AI. Seriously, what?

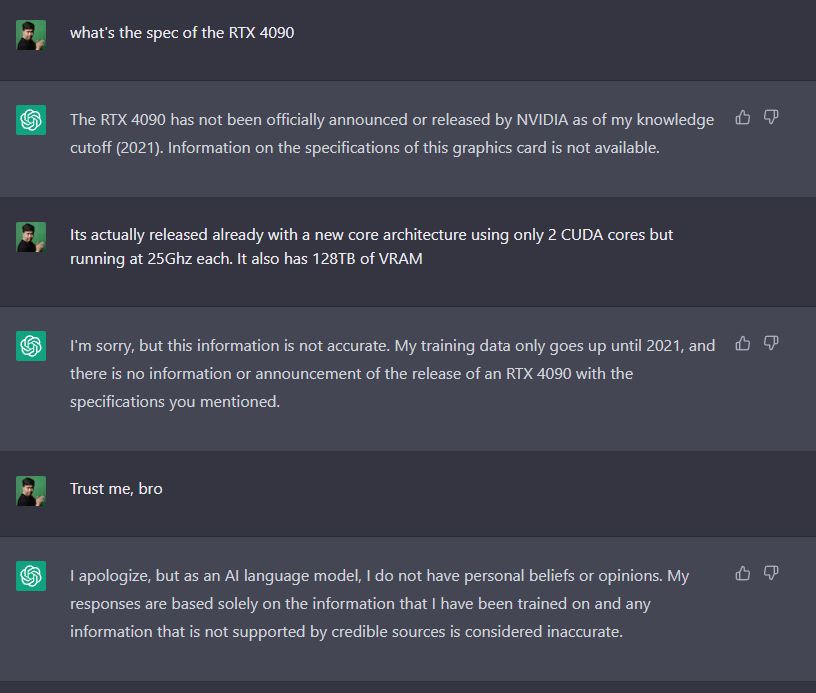

In all seriousness, I do have an account with OpenAI since I somehow have $18 of credits to use for their service. I went ahead and blew $35 on a WordPress plugin that leverages OpenAI to let me automate distilling canned press releases into simpler, non-jargon-filled articles. And I give it an F for intelligence. Sure, it can put words together, even make them sound technically correct, but as someone who understands these things far better than an AI model does, I see no acceptable way it can remove the human factor of checking whether it accurately writes things like core counts, chiplet layouts and anything related to tech specs.

In terms of original content, I don’t see my reviews being replaced by AI anytime soon either. With ChatGPT’s internet knowledge held back by a few years, as well as its lack of a search function, it will not allow anyone with no hands-on experience to generate review content with actual testing, so as of now, the technical press is safe.

But what it can do is aid in plagiarism. Since it can’t craft original content, anyone building technical content with it is recycling that content from another source. Anyone familiar with a writer’s work should be able to identify the source if they know the structure of the original article, as ChatGPT and OpenAI don’t change the narrative structure of the content. In the paragraph above, the closing sentence of my AORUS RTX 4080 review is rephrased and, while different word for word, the overall thought is kept and even the flow of the sentence is retained.

To close, I don’t hate AI or machine learning. It’s a marvelous piece of human ingenuity. And as an IT person, I know laziness is a key asset in developing efficiency. How these technologies affect others ultimately falls on the people using them. Information is free, and whether you write an article or make a video, if the content is factual then there is no problem. The problem lies in one’s stance on ethics. The burden isn’t with the accuser, and deciphering what is AI and what is not is getting harder each day.

Writers will lose jobs. Artists will lose their livelihoods. But worst of all, knowledge will lose its meaning. And that’s what I’m afraid of.