Ray tracing has primarily been used in recent game development for lighting and reflection systems, even though both Sony and NVIDIA have suggested that their hardware could also apply it to audio. So far, no game or application has used ray-traced audio prominently enough to market it as a feature. However, Vericidium, a developer working on a proprietary engine, has extended the ray tracing concept to handle real-time audio simulation. The resulting plugin, currently in the testing phase, brings ray-traced audio to Unreal Engine and Godot, offering improved spatial fidelity and a sound visualization system for deaf players.

The video above explains visually how the concept works and how Vericidium is making it possible. He is also asking fellow game developers for feedback to guide further improvements.

The Limitations of Conventional Game Audio

In typical 3D game audio systems, sound spatialization is handled via binaural audio using HRTFs (Head-Related Transfer Functions). These allow stereo audio to simulate directional cues by manipulating how sound reaches each ear. While this effectively simulates directionality on a flat axis, it is limited by a lack of environmental interaction.

Most engines use room-based audio occlusion, where predefined audio zones determine how much sound is muffled or altered by walls and other geometry. This method struggles with dynamically changing environments, non-standard room layouts, and destructible structures.

Ray-Traced Audio Concept

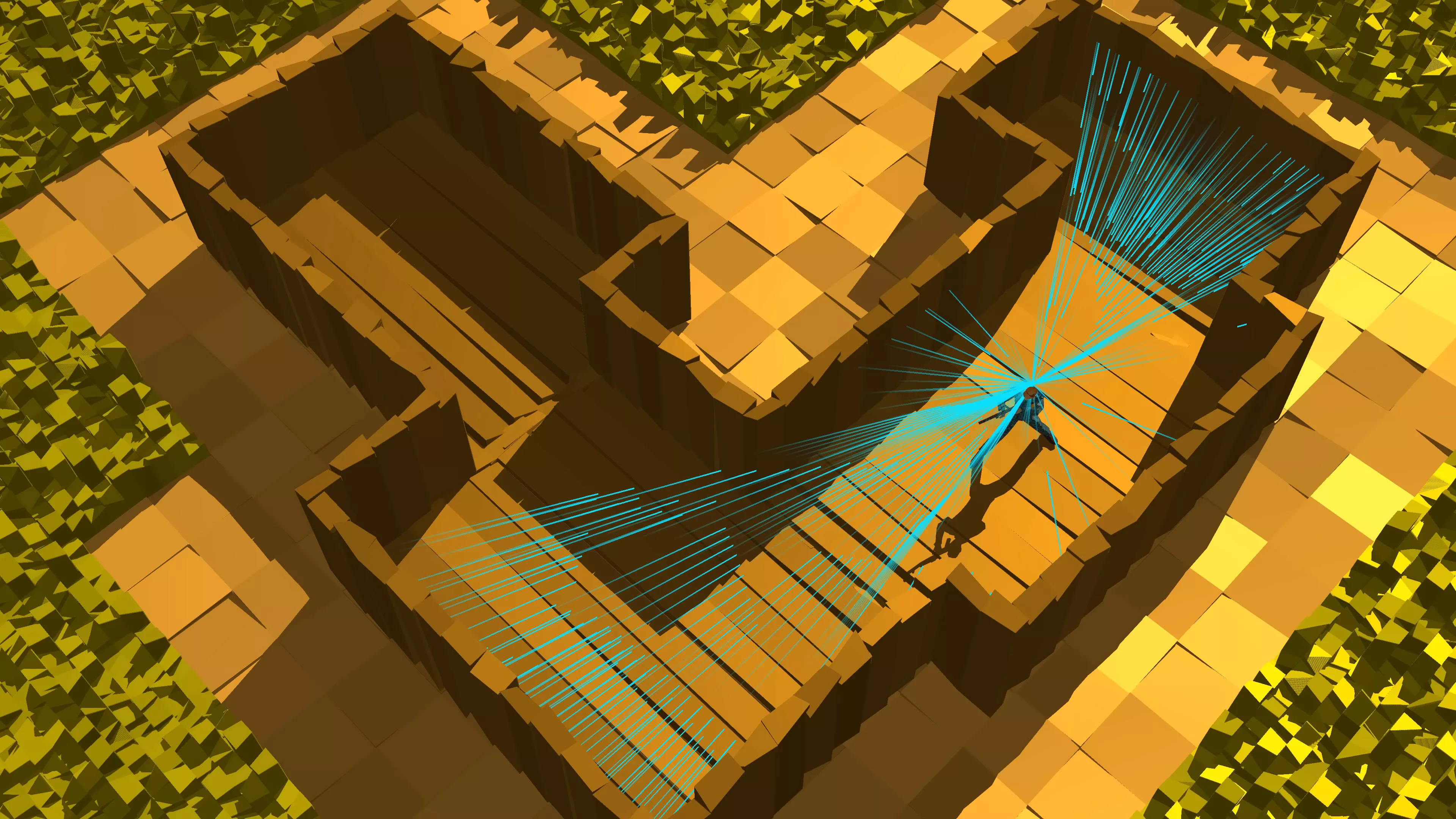

Vericidium’s approach replaces static audio zones with a ray-based sampling system. Rays are fired outward from the player’s position (or ears), and these rays interact with world geometry to determine sound clarity, occlusion, reflection, and transmission.

The system includes four major ray types:

- Green rays – Trace direct paths to sound sources, determining clarity and strength of direct audio.
- Blue rays – Reflective rays that return to the player to simulate echo behavior.
- Orange rays – Penetration rays that pass through solid geometry to determine sound energy loss due to material thickness.
- Yellow rays – Directional average paths used to determine the orientation of ambient or indirect sounds such as rain.

All rays operate against a voxelized scene, which improves computational performance compared to triangle-mesh-based raycasting.
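
To make the voxel idea concrete, here is a minimal sketch of marching one ray through a voxel grid and recording how far it travels through open space and through solid material. It is not the plugin's code: the function name `march_ray`, the nested-list grid layout, the one-unit voxel size, and the fixed-step sampling are all assumptions made for illustration. A production system would more likely use an exact grid traversal such as a DDA walk.

```python
import math

def march_ray(grid, origin, direction, max_dist, step=0.25):
    """Step along a ray through a boolean voxel grid (one unit per voxel).

    grid[x][y][z] is True for solid voxels. Returns (free_dist, solid_dist):
    the distance covered in empty and in solid voxels before max_dist or
    the edge of the grid is reached. Illustrative only.
    """
    length = math.sqrt(sum(c * c for c in direction))
    d = [c / length for c in direction]
    free_dist = solid_dist = 0.0
    t = 0.0
    while t < max_dist:
        # Sample the midpoint of the current step.
        p = [origin[i] + d[i] * (t + step / 2) for i in range(3)]
        x, y, z = (int(math.floor(c)) for c in p)
        inside = (0 <= x < len(grid)
                  and 0 <= y < len(grid[0])
                  and 0 <= z < len(grid[0][0]))
        if not inside:
            break  # the ray left the grid
        if grid[x][y][z]:
            solid_dist += step
        else:
            free_dist += step
        t += step
    return free_dist, solid_dist

# A corridor of 8 voxels along x with a wall two voxels thick at x = 3 and 4.
corridor = [[[x in (3, 4)]] for x in range(8)]
print(march_ray(corridor, (0.5, 0.5, 0.5), (1, 0, 0), max_dist=7.0))  # (5.0, 2.0)
```

In this sketch a direct-path (green) ray would count as unobstructed when `solid_dist` is zero, while a penetration (orange) ray would use `solid_dist` as the thickness of material crossed.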

Applications and Features

- Occlusion Handling
  - Sound clarity is calculated based on the number of green rays reaching a source.
  - Wall thickness is factored in by measuring how long orange rays spend inside solid material, reducing energy accordingly.
- Reflection and Echo
  - Echo behavior is generated by measuring the distance and return time of blue rays.
  - The number of returned blue rays determines echo volume.
  - Partial occlusion (e.g., cluttered environments) reduces ray returns, decreasing echo accordingly.
- Indoor vs. Outdoor State Handling
  - Escaping rays are tracked to determine whether a player is in an enclosed or open environment.
  - Outdoor ambiance (e.g., rain) is shaped by the average direction of these escaping rays.
  - If a player is indoors with no direct path to the outside, indirect reflections (via yellow rays) are used to approximate the correct ambient sound direction.
- Material Interaction
  - The system accounts for destructible and movable geometry.
  - Changes in wall shape, thickness, or position are automatically recalculated via updated ray sampling.
- Weather and Environmental Simulation
  - Environmental sounds (e.g., thunder, rain) shift in direction and intensity based on the real-time sampling of gaps or openings in geometry.
  - When in proximity to windows or open walls, players receive more direct ambient audio from those openings.
- Permeation and Attenuation
  - Longer travel time inside solids results in increased attenuation.
  - Attenuation values are derived from energy decay of orange rays, allowing for more realistic muffling effects in multi-layered or thicker structures.
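
The article does not give formulas for these features, so the following is only a sketch of how ray counts and distances could be turned into audio parameters. Every name and constant here is an assumption: clarity is taken as the fraction of direct rays that arrive, attenuation as an exponential decay with a made-up `absorption_per_metre`, echo delay as mean return path length divided by the speed of sound, and ambient direction as the normalized average of the escaping ray directions.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second in air at roughly 20 °C

def clarity(direct_hits, rays_cast):
    """Fraction (0..1) of direct (green) rays that reached the source."""
    return direct_hits / rays_cast if rays_cast else 0.0

def transmission_gain(solid_metres, absorption_per_metre=2.0):
    """Energy kept by a penetration (orange) ray after crossing solid material.

    absorption_per_metre is a hypothetical material constant.
    """
    return math.exp(-absorption_per_metre * solid_metres)

def echo(return_path_lengths, rays_cast):
    """Echo (delay_seconds, volume) from reflected (blue) rays.

    return_path_lengths holds the full out-and-back length in metres of
    each ray that returned to the listener.
    """
    if not return_path_lengths or not rays_cast:
        return 0.0, 0.0
    mean_path = sum(return_path_lengths) / len(return_path_lengths)
    return mean_path / SPEED_OF_SOUND, len(return_path_lengths) / rays_cast

def ambient_direction(escaped_dirs):
    """Unit vector averaging the directions of rays that escaped outdoors.

    Returns None when no ray escaped, or when the directions cancel out.
    """
    if not escaped_dirs:
        return None
    total = [sum(d[i] for d in escaped_dirs) for i in range(3)]
    norm = math.sqrt(sum(c * c for c in total))
    return None if norm == 0 else tuple(c / norm for c in total)
```

Under these assumptions, a cluttered room that blocks half of the reflected rays would halve the echo volume, and a player with no escaping rays would get `None` from `ambient_direction`, which is the case where the article says indirect (yellow) rays take over.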

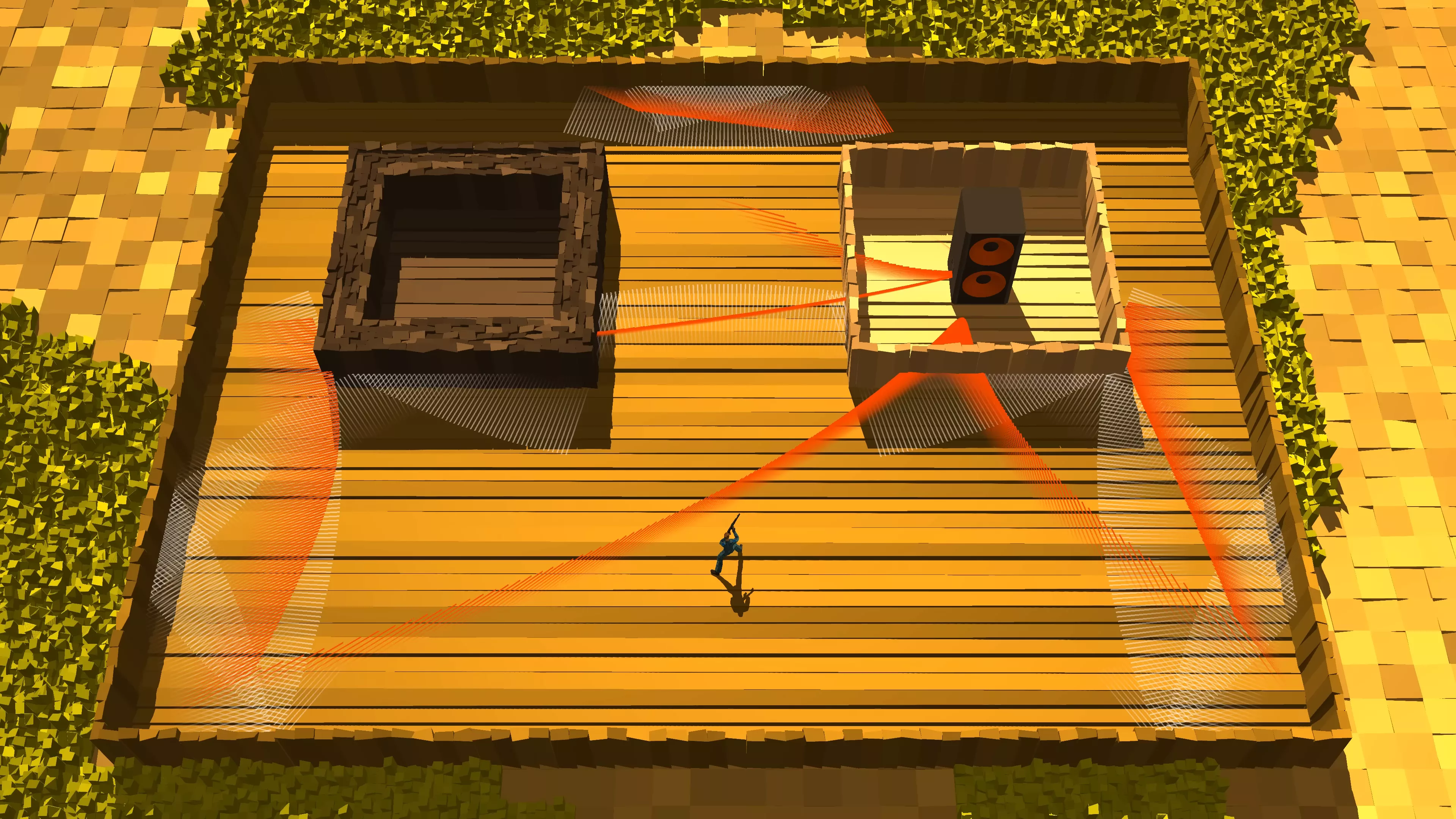

Sound Visualization for Deaf Players

The plugin includes a system for visualizing sound sources by reversing the ray origin. Instead of firing rays from the player, rays are cast outward from active sound sources, and impact points are marked with visual indicators (dots or particles) on surfaces.

Key features:

- Dots appear on surfaces struck by rays from sound sources.
- Dots update in real time as the emitter moves or makes noise.
- Size of dots is proportional to volume or propagation radius.
- Dots can be color-coded based on sound type (e.g., gunfire, footsteps).
- Shapes may vary to support colorblind users.
- Dots disappear when the sound stops.

This method provides a visual field of “sound propagation” for non-hearing users and can be implemented as an overlay or integrated into HUD systems.
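
As a rough illustration of the data such an overlay might consume, the sketch below turns ray impact points from one sound source into dot markers. The `SoundDot` type, the `STYLES` table, and the `build_dots` function are hypothetical names invented for this example, and the color and shape pairings are placeholders rather than anything the plugin defines.

```python
from dataclasses import dataclass

@dataclass
class SoundDot:
    position: tuple  # world-space impact point of a ray
    size: float      # scales with the volume of the sound
    color: str       # encodes the sound type
    shape: str       # second cue so the type does not rely on color alone

# Hypothetical mapping from sound type to (color, shape).
STYLES = {
    "gunfire": ("red", "triangle"),
    "footsteps": ("blue", "circle"),
}

def build_dots(hit_points, sound_type, volume, is_playing, base_size=0.1):
    """Create one dot per impact point, or none once the sound has stopped."""
    if not is_playing:
        return []
    color, shape = STYLES.get(sound_type, ("white", "square"))
    return [SoundDot(p, base_size * volume, color, shape) for p in hit_points]
```

Calling `build_dots` every frame with the latest impact points would give the real-time updating behavior described above, since a stopped sound simply yields an empty list.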

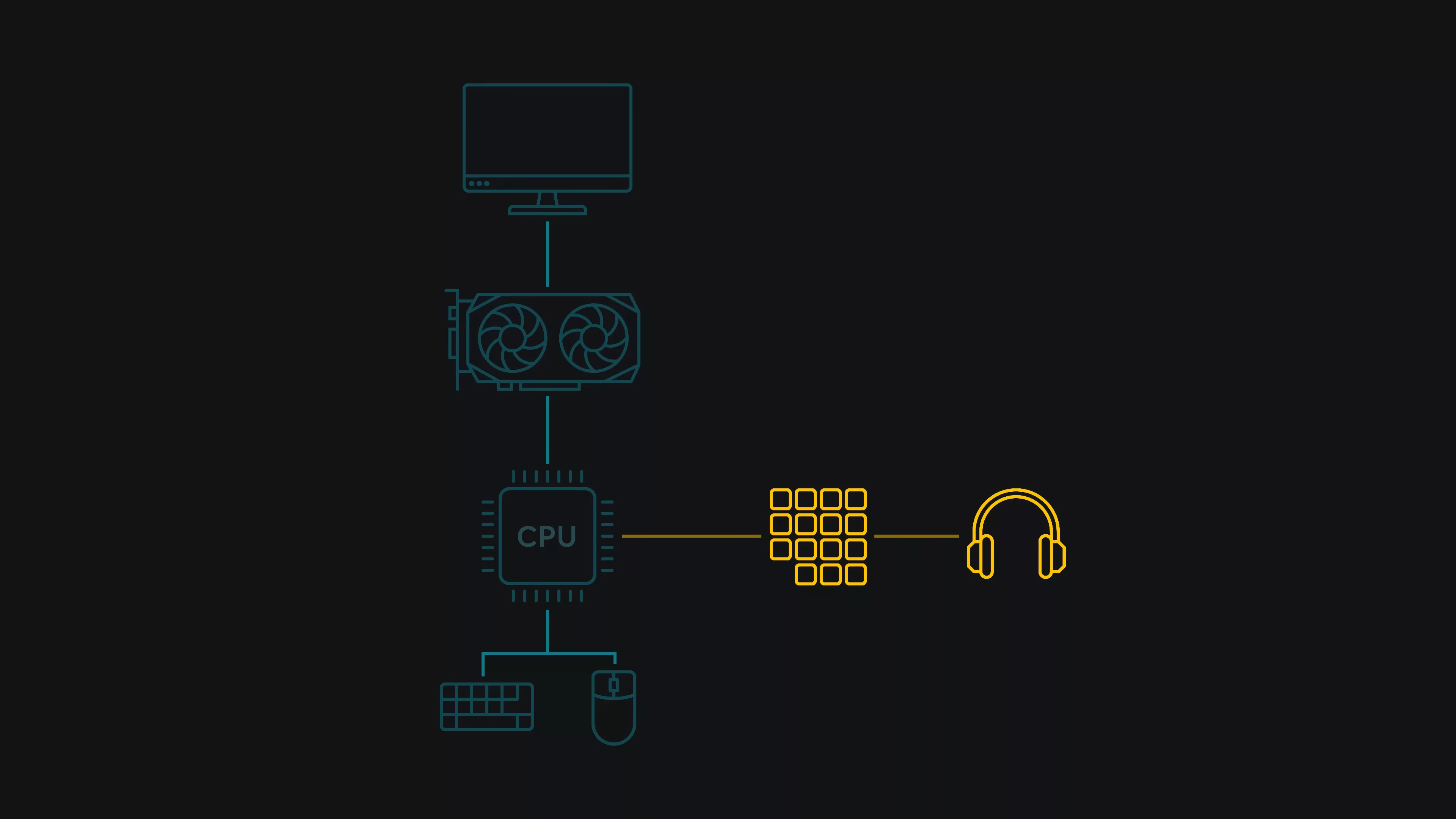

Performance and Hardware Considerations

Despite using ray tracing, the system does not require GPU acceleration (RTX, DXR, etc.). All audio ray calculations are performed on the CPU, specifically on background threads to avoid interrupting the game’s render or input cycles.

- Scene geometry is converted into a voxel grid for efficient ray intersection.
- The grid resolution is configurable, allowing developers to trade precision for performance.
- Designed to function on a wide range of hardware, including low-end systems.
- Does not rely on any proprietary hardware features.

This CPU-based design ensures wide compatibility and avoids adding load to GPU-based render pipelines.
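
The sketch below illustrates the two ideas in this section: a configurable grid resolution and work pushed onto a background thread. It is an assumption-laden toy, not the plugin's implementation. `voxelize_boxes` and `rebuild_in_background` are hypothetical names, geometry is reduced to axis-aligned boxes, and Python threads are used only to show the structure, since standard CPython does not run Python bytecode on several threads in parallel.

```python
import itertools
import math
from concurrent.futures import ThreadPoolExecutor

def voxelize_boxes(boxes, world_size, cell_size):
    """Return (cells_per_axis, solid_cells) for a cubic world.

    boxes is a list of (min_corner, max_corner) axis-aligned boxes. A voxel
    counts as solid when its centre lies inside any box.
    """
    n = math.ceil(world_size / cell_size)
    solid = set()
    for idx in itertools.product(range(n), repeat=3):
        centre = [(i + 0.5) * cell_size for i in idx]
        if any(all(lo[a] <= centre[a] < hi[a] for a in range(3))
               for lo, hi in boxes):
            solid.add(idx)
    return n, solid

def rebuild_in_background(pool, boxes, world_size, cell_size):
    """Queue a grid rebuild on a worker thread and return its Future."""
    return pool.submit(voxelize_boxes, boxes, world_size, cell_size)

if __name__ == "__main__":
    wall = [((2.0, 0.0, 0.0), (3.0, 4.0, 4.0))]  # a wall one unit thick
    with ThreadPoolExecutor(max_workers=1) as pool:
        coarse = rebuild_in_background(pool, wall, 4.0, 1.0)
        fine = rebuild_in_background(pool, wall, 4.0, 0.5)
        # A game loop would keep running here and poll future.done().
        print(len(coarse.result()[1]), len(fine.result()[1]))  # 16 128
```

Halving the cell size multiplies the number of voxels by eight, which is the precision-for-performance trade-off the configurable resolution exposes.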

Implementation and Availability

The plugin is still in the testing phase, with the developer seeking feedback from the community. It is planned as a paid plugin for Unreal Engine and Godot.

The underlying code, animations, and samples are partially available through Vericidium’s YouTube channel and linked repositories. Developers interested in participating in the testing program are encouraged to contact the developer directly.

Vericidium’s plugin applies ray tracing concepts to audio in a way that addresses multiple longstanding challenges in game sound design. It offers a scalable, CPU-friendly method for simulating occlusion, echo, and environmental audio behavior, and adds a configurable visualization feature for accessibility use cases. Its flexibility and engine compatibility make it a candidate for further adoption, pending continued testing and optimization.