With the boom in AI large language models (LLMs), you’re probably familiar with at least ChatGPT and Windows Copilot. Both are cloud-based, meaning whatever you type gets sent to OpenAI’s or Microsoft’s servers, where it can be used as they please. While security and privacy aren’t inherently the issue here, availability is the primary concern: not everyone has, or wants to have, access to these services.

Regardless, NVIDIA has been the backbone of these LLMs. They are built on top of large A100 or H100 HPC systems, so NVIDIA hardware is largely responsible for ChatGPT whipping up a response for you. Previewed during CES, Chat with RTX is an offline, local chatbot that runs on GeForce RTX graphics cards (an RTX 30 series at minimum), letting users run their own chatbot and use their own data without anything going to the cloud.

Empowering Personalization with Advanced AI

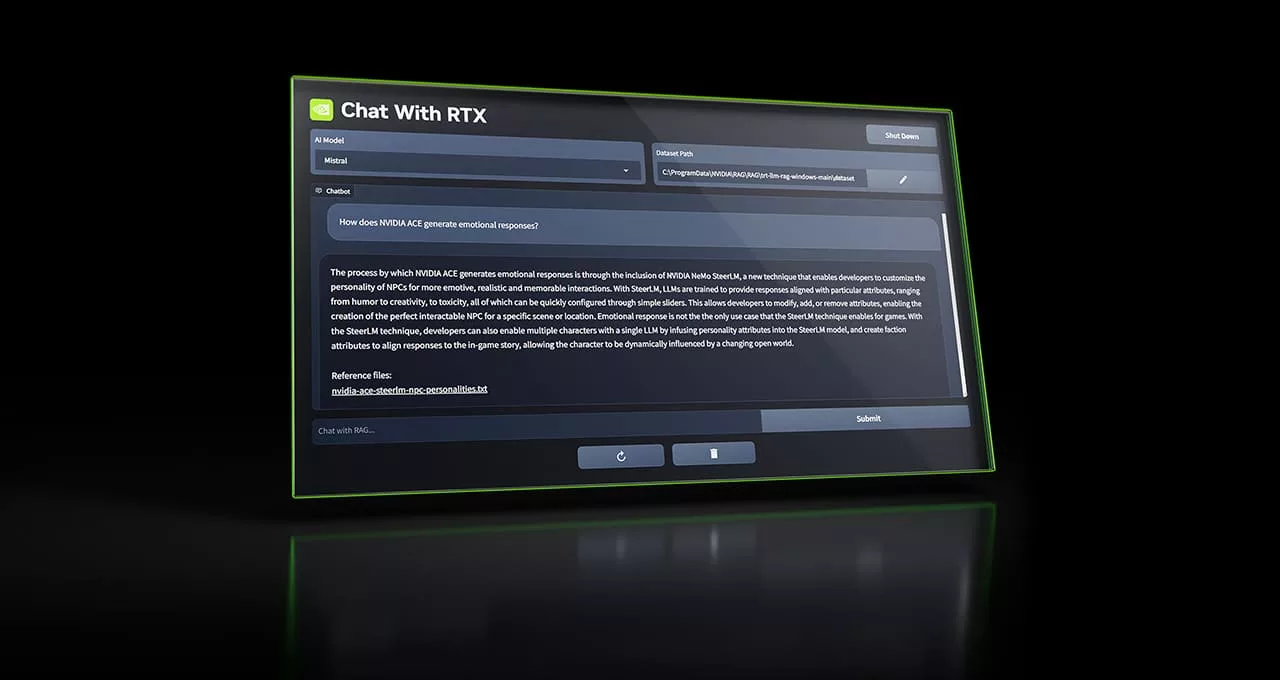

Chat with RTX uses retrieval-augmented generation (RAG) alongside NVIDIA’s TensorRT-LLM software and RTX acceleration to deliver a personalized generative AI experience on a local GeForce-powered Windows PC. Users can connect local files as a dataset for an open-source large language model like Mistral or Llama 2, enabling quick and contextually relevant responses to queries.

This means no more tedious searches through notes or documents. Users can simply ask, for instance, “What was the restaurant my partner recommended while in Las Vegas?” and receive the answer, with context, drawn from the designated local files. It won’t browse your entire PC, but be careful what you put inside its folder.
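To make the RAG idea a little more concrete, here is a minimal Python sketch of the retrieve-then-prompt step. It is not how Chat with RTX is implemented: real RAG pipelines typically rank text by embedding similarity, whereas this toy version swaps in plain keyword overlap, and the sample notes (including the restaurant name "Example Bistro") and all function names are made up for illustration.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query, chunk):
    """Toy relevance score: number of token occurrences shared by query and chunk."""
    q = Counter(tokenize(query))
    c = Counter(tokenize(chunk))
    return sum(min(q[t], c[t]) for t in q)


def retrieve(query, chunks, k=1):
    """Return the k chunks with the highest keyword overlap with the query."""
    ranked = sorted(chunks, key=lambda ch: score(query, ch), reverse=True)
    return ranked[:k]


def build_prompt(query, chunks, k=1):
    """Place the retrieved context ahead of the question, ready for an LLM."""
    context = "\n".join(retrieve(query, chunks, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical local notes standing in for the user's files.
notes = [
    "Groceries: eggs, rice, coffee.",
    "Las Vegas trip: my partner recommended the restaurant Example Bistro.",
    "Meeting moved to Thursday at 3 pm.",
]
question = "What was the restaurant my partner recommended while in Las Vegas?"
prompt = build_prompt(question, notes)
print(prompt)
```

The resulting prompt would then be handed to the local model, which answers from the retrieved note rather than from its training data alone.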

Broad File Support and YouTube Integration

The tool supports a range of file formats, including .txt, .pdf, .doc/.docx, and .xml. Point the application at a folder containing these files and it swiftly loads them into its library. Additionally, Chat with RTX can incorporate knowledge from YouTube videos and playlists into its database, enriching the chatbot’s responses with content from favorite influencers or educational resources. It relies on each video’s closed captions to get an idea of the content.
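As a rough illustration of the "point it at a folder" step, the following Python sketch collects the files a tool like this might ingest. The extension list comes from the formats named above; the function name and the choice to search subfolders recursively are assumptions, not documented Chat with RTX behavior.

```python
from pathlib import Path

# File types listed in the article; matching is case-insensitive.
SUPPORTED = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def find_supported_files(folder):
    """Return a sorted list of files under `folder` with a supported extension.

    Assumption: subfolders are searched too (rglob); the real tool may differ.
    """
    root = Path(folder)
    return sorted(
        p for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in SUPPORTED
    )
```

Calling `find_supported_files("my_notes")` on a hypothetical `my_notes` folder would skip images and other unsupported files, which is a handy way to preview what you are about to expose to the chatbot.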

Privacy and Speed with Local Processing

A key advantage of Chat with RTX is its local operation on Windows RTX PCs and workstations, ensuring quick results without compromising user data privacy. Unlike cloud-based solutions, sensitive data processed by Chat with RTX remains on the device, eliminating the need for internet connectivity or third-party data sharing.

Requirements and Developer Opportunities

Chat with RTX is compatible with Windows 10 or 11 and requires the latest NVIDIA GPU drivers, in addition to a GeForce RTX 30 Series GPU (or higher) with a minimum of 8GB of VRAM. NVIDIA also highlights the potential for developers to accelerate Large Language Models (LLMs) with RTX GPUs through the TensorRT-LLM RAG developer reference project on GitHub. This opens new avenues for creating and deploying RAG-based applications optimized for RTX.
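The requirements above can be expressed as a quick checklist in code. This Python sketch is only an illustration: the function names are hypothetical, the GPU name is assumed to follow the usual "GeForce RTX 3060" pattern, and the driver version is not checked at all.

```python
import re

MIN_SERIES = 30             # GeForce RTX 30 Series or higher
MIN_VRAM_GB = 8             # minimum VRAM stated in the article
SUPPORTED_WINDOWS = {10, 11}


def gpu_series(gpu_name):
    """Return the series (e.g. 30 for 'GeForce RTX 3060'), or None if no match."""
    m = re.search(r"GeForce RTX (\d{2})\d{2}", gpu_name)
    return int(m.group(1)) if m else None


def meets_requirements(windows_version, gpu_name, vram_gb):
    """Check OS, GPU series, and VRAM against the stated minimums."""
    series = gpu_series(gpu_name)
    return (
        windows_version in SUPPORTED_WINDOWS
        and series is not None
        and series >= MIN_SERIES
        and vram_gb >= MIN_VRAM_GB
    )


print(meets_requirements(11, "NVIDIA GeForce RTX 3060", 12))
print(meets_requirements(10, "NVIDIA GeForce RTX 2080", 8))
```

Under these assumptions, the first call passes and the second fails because an RTX 20 series card falls below the minimum series.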

3 Comments

Stable Diffusion is doable now, too

Wow, thanks for the info

Check out AUTOMATIC1111, bro; it's an easy install if you're on NVIDIA